In our previous post, we explored the fascinating differences between human object recognition and artificial intelligence (AI) capabilities. Humans can recognise objects quickly and accurately, leveraging complex neural processes and contextual understanding.

In contrast, AI, despite its remarkable advancements, still faces challenges in matching human-like perception. This blog post will explore how insights from human neural processes can enhance AI training, ultimately bridging the perception gap and leading to more sophisticated and human-like object recognition capabilities in AI systems. Additionally, we will highlight the potential applications of these advancements, particularly in critical areas such as exci’s AI-driven wildfire/bushfire detection technology.

The Human Brain’s Object Recognition Capabilities

Understanding the human brain’s object recognition processes provides valuable insights for improving AI. Let’s revisit some of the key aspects of human perception:

- Neural Pathways and Hierarchical Processing: The human visual system processes visual information through a hierarchical structure. The primary visual cortex (V1) handles basic visual features such as edges and orientations. As information progresses through higher visual areas (V2, V4, IT cortex), more complex features and object representations are formed. This hierarchical processing allows humans to recognise objects in various contexts and under different conditions.

- Contextual Understanding and Generalisation: Humans excel at integrating contextual information and generalising from limited examples. This ability enables us to recognise objects in diverse environments and adapt to new situations with minimal effort.

- Perceptual Attributes and Object Classification: Recent research has identified 49 distinct properties that the human brain uses to recognise and classify objects, including attributes such as colour, shape, size, and texture. This extensive representation helps humans discern objects quickly and accurately, even when partially obscured or presented in unusual orientations.

Case Study: Visual Cortex Inspired AI Training

In a recent study, researchers investigated whether a feedforward convolutional network (e.g., VGG-16), initially trained for object recognition, exhibits qualitative patterns akin to human perception. In such a feed-forward system, data flows linearly from input to output without recursive feedback loops. To validate their findings, the researchers conducted control experiments using a randomly initialised VGG-16 network, ensuring observed properties stemmed from training rather than inherent architecture. Their study yielded consistent results across various VGG-16 instances trained with different random seeds and other feedforward deep networks of varying depths and architectures.

They categorised identified perceptual and neural properties from visual psychology and neuroscience into five broad groups, examining their presence in deep neural networks (DNNs):

Object or Scene Statistics

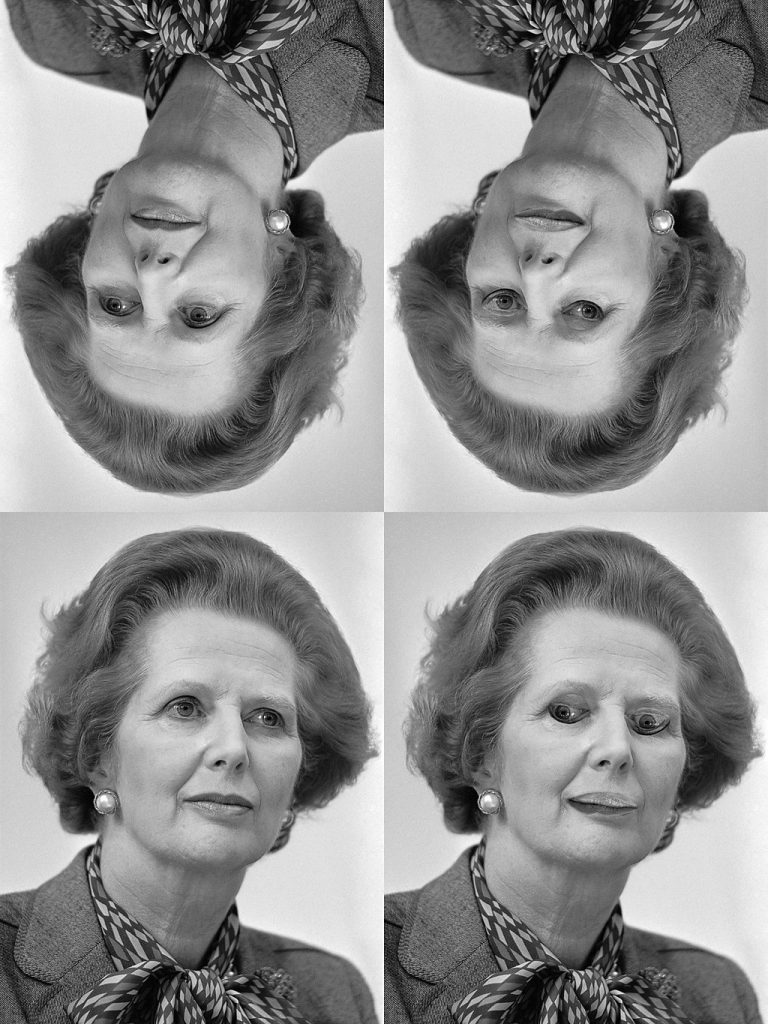

- Thatcher Effect: The Thatcher effect is a phenomenon in which distortions in upright faces become easier to recognise than in inverted faces. This occurs because our brains are accustomed to processing faces in their upright orientation.

Photography: Rob BogaertsImage manipulation: Phonebox, CC0, via Wikimedia Commons

- Mirror Confusion: Difficulty distinguishing mirrored images. Humans perceive vertical mirror reflections as more similar than horizontal ones.

- Object–Scene Incongruence: Detecting inconsistencies between objects and their surrounding scenes. Human perception of objects is influenced by the context in which they appear. Recognition accuracy drops when an object is placed in an incongruent context (e.g., a hatchet in a supermarket).

Tuning Properties of Neurons in Visual Cortex

- Multiple Object Tuning: Neurons’ responses to multiple objects. Multiple Object Normalisation, observed in the high-level visual cortex, involves a neuron responding to multiple objects by averaging its responses to each individual object. Imagine you have a room with several lamps. Each lamp has a different brightness level. If you turn on one lamp, you can measure how bright the room gets. If you turn on a second lamp, instead of just adding the brightness of both lamps directly, you might think of the room’s brightness as the average of the two lamps’ brightness levels.

- Correlated Sparseness: Correlated sparseness means that a neuron that is selective and responsive to a few specific stimuli in one dimension (e.g., shapes) will also be selective and responsive in a similar way to stimuli in another dimension (e.g., textures).

Relations Between Object Features

- Weber’s Law: Sensitivity to relative changes in stimulus intensity. Weber’s law states that the sensitivity to noticeable changes in any sensory quantity is proportional to the baseline level used for comparison. According to Weber’s law, if you compare two lines, the noticeable difference in their lengths is relative. For example, noticing the difference between 10 cm and 11 cm (a 10% increase) is similar to noticing the difference between 20 cm and 22 cm (also a 10% increase), even though the absolute difference is larger in the latter case.

- Relative Size: Perception of size differences between objects. In the brain, some neurons respond more similarly when two objects in a display change size proportionally (e.g., both getting larger or smaller at the same rate) than when they change size independently (e.g., one getting larger while the other getting smaller).

- Surface Invariance: Consistency in perceiving surface characteristics despite variations. Surface invariance refers to the ability of neurons to respond similarly when both the pattern (like stripes or dots) and the surface (like a sphere or cube) undergo congruent changes in curvature or tilt. This suggests that the neurons process the pattern and surface as integrated features.

3D Shape and Occlusions

Humans have a remarkable ability to discern differences in 3D structures, such as variations in cuboid shapes, more easily than differences in two-dimensional (2D) features alone, such as variations in Y-shaped images.

Additionally, our visual system adeptly handles occlusion relations between objects, which complicates identifying a target among occluded distractors compared to recognizing simpler 2D feature differences. This suggests that our visual system processes both occluded and unoccluded displays similarly

Object Parts and Global Structure

Humans naturally process and recognise objects by their parts but find it more challenging to search for an object broken into its natural parts than unnatural ones. This indicates a strong capability in recognising and understanding the natural structure and decomposition of objects.

Summary of Key Findings

Properties Present in Randomly Initialised Networks

Randomly initialised deep neural networks exhibit several interesting properties even before any training:

- Correlated Sparseness: Units in the network show correlated sparseness, meaning their activation patterns across stimuli are similar.

- Relative Size Encoding: The network encodes relative size information, reflecting changes in object proportions.

- Global Advantage: There is a bias towards processing global shape information over local details.

These properties suggest that the architecture of deep networks inherently supports certain features, even without specific training tasks. This aligns with findings that randomly initialised networks can produce useful representations, which have been applied in predicting early human visual responses.

Properties Present in Deep Networks Trained for Object Recognition

After training the AI for object recognition, several additional properties emerged:

- Weber’s Law: The network exhibits sensitivity to relative changes in sensory quantities, such as length or intensity, which aids in invariant object recognition.

- Thatcher Effect, Mirror Confusion, Scene Incongruence: The network shows sensitivity to contextual regularities and scene incongruence, similar to human perception.

- Multiple Object Normalisation: Divisive normalisation in the random network was trivial and disappeared after network training, suggesting deep networks acquire multiple object normalisation and image selectivity only post-training.

- Over-Reliance on Scene Context: Deep networks may rely heavily on scene context for object recognition, often more than humans, as indicated by significant drops in accuracy in incongruent scenes.

These findings suggest that object recognition training refines the network’s ability to handle complex visual contexts and invariant features necessary for robust recognition.

Properties Absent in Deep Networks

However, certain properties are notably absent in both randomly initialised and trained deep networks:

- 3D Processing: Networks do not exhibit sensitivity to 3D shapes or orientations, suggesting a limitation in perceiving spatial depth.

- Occlusions and Surface Invariance: There is no inherent ability to distinguish between occluded and unoccluded objects, or to maintain consistency in shape under different surface conditions.

- Part Processing and Global Advantage: Deep networks do not show systematic part decomposition, regardless of training.

These absences indicate that additional task demands or modifications to the network architecture might be necessary to develop these capabilities.

Intermediate Representations and Layer-Specific Effects

- Transient Properties in Intermediate Layers: Some properties transiently emerge in the network’s intermediate layers but diminish in the final layers crucial for classification.

- Role of Intermediate Computations: These intermediate representations may serve as transitional stages for creating final object representations required for classification tasks.

- Comparison to Brain Pathways: Analogous to brain processing, where early, intermediate, and later stages in the ventral pathway correspond to early, middle, and later layers in neural networks, studying these intermediate representations can provide insights into visual processing stages.

Implications for AI Development

These findings suggest that while AI can replicate some aspects of human perception, additional task demands or modifications to network architecture are necessary to develop more advanced capabilities. By leveraging insights from human neural processes and focusing on the following areas, AI can achieve more sophisticated object recognition:

- Intermediate Representations: Understanding transient properties in intermediate layers of neural networks can provide insights into visual processing stages, similar to how early, intermediate, and later stages in the ventral pathway correspond to visual processing in the human brain.

- Task-Specific Training: Designing AI models with specific tasks in mind and ensuring they are exposed to diverse, high-quality datasets can enhance their perceptual abilities.

- Neural Network Architecture: Exploring novel architectures and training methods that emulate human neural properties can lead to more robust and human-like AI systems.

Conclusion

In this blog post, we explored applying insights from human neural processes to enhance AI training, closing the perception gap between humans and machines. By integrating hierarchical feature learning, perceptual attributes, contextual understanding, and generalisation capabilities, AI can achieve more sophisticated and human-like object recognition.

In our next post, we will expand on these concepts by focusing on training AI for wildfire/bushfire detection. We’ll emphasise the critical role of large, high-quality datasets in this domain and showcase the impressive advancements made possible by exci’s advanced AI-driven wildfire/bushfire detection technology.

If you missed our first Blog post, here is the link:

Unlocking Object Recognition: How Toddlers and AI See the World Differently

by Gabrielle Tylor

exci – The Smoke Alarm for the Bush

AI-powered Wildfire/Bushfire Detection Technology

3 July 2024